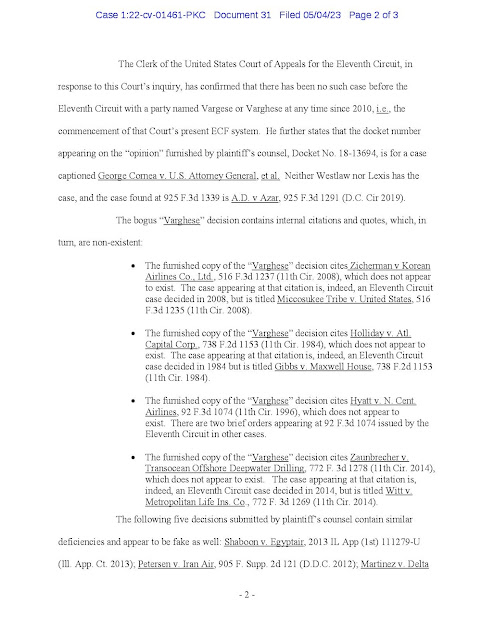

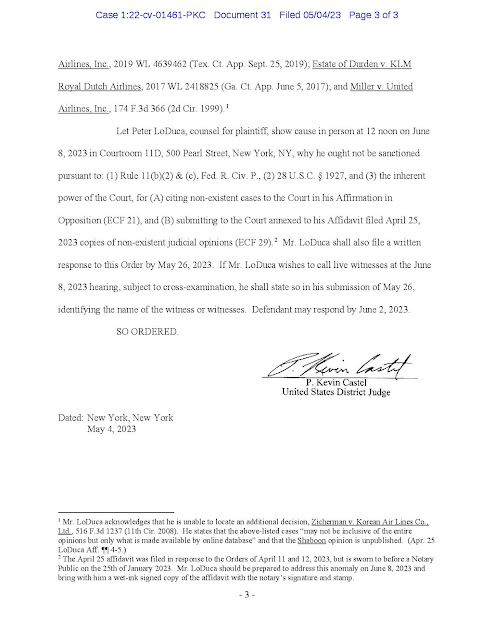

Note to compliance officers: NEVER assume that any legal material you find through ChatGPT, or any similar product, is real, especially citations to or extracts from court decisions, unless you actually locate the cases, citations and quotes yourself in traditional Westlaw or Lexis, using conventional legal research techniques. If you cite or quote a source that turns out to be bogus, a fiction created by the program, there will be personal consequences once the facts show that the cases do not exist.

Read the Order to Show Cause reproduced here, and imagine what is most likely going to happen, when the scheduled hearing occurs, to the lawyer who took a shortcut by depending upon ChatGPT for his legal research. He may also be liable to his client for professional negligence, also known as malpractice. The case is a personal injury action, filed by an individual who alleges he was hurt during an Avianca flight. What if the lawyer's negligence contributes to the dismissal of the case?

You will note that, more often than not, I place the relevant portions of the court cases I am reporting on at the end of my articles. Compliance officers who are not attorneys may wonder why I do that. It is to give the reader proof that what I am reporting is accurate. Under no circumstances are you to rely upon ChatGPT for any material that you have not personally verified and vetted, if you want to keep your job and avoid being a defendant in expensive civil litigation.
